In ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2022)

Received the Best Paper Award at SIGGRAPH Asia 2022

Teaser


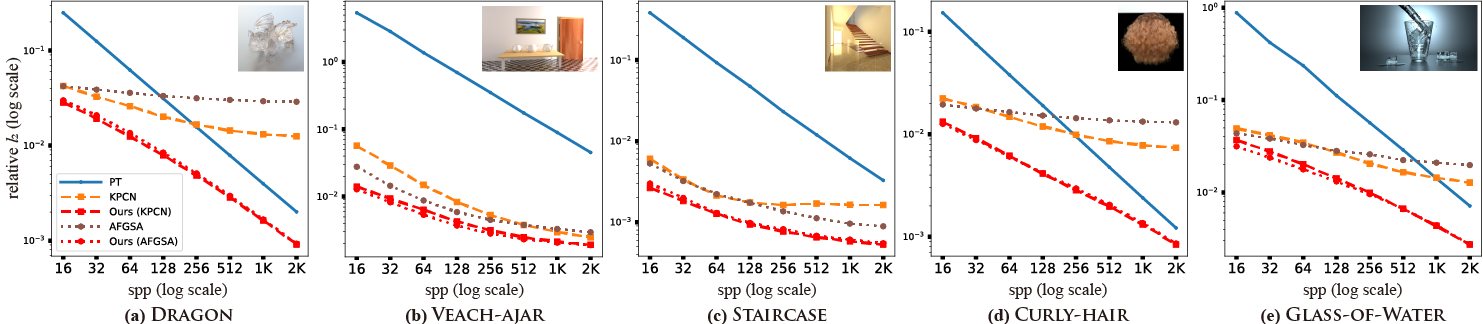

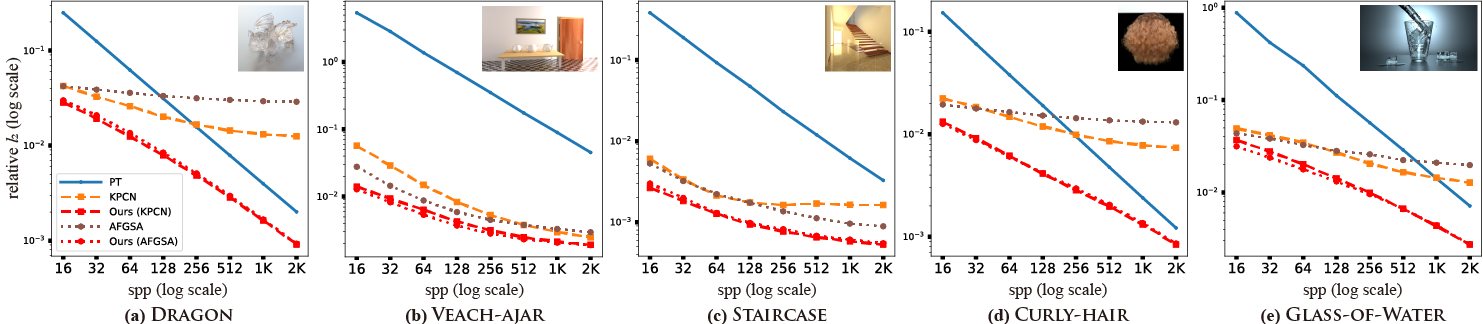

Numerical convergence of the learning-based denoisers, KPCN [Bako et al. 2017] and AFGSA [Yu et al. 2021], with and without our technique.

We report the relative l2 errors [Rousselle et al. 2011] of the tested techniques from 16 to 2K samples per pixel (spp).

The state-of-the-art denoisers show much lower errors than their input, i.e., path tracing (PT), at small sample counts (e.g., 16 spp), but their improvements become minor (c) or disappear ((a), (d), and (e)) at larger sample counts due to their slow convergence rates. The exception is the Veach-Ajar scene, which contains fireflies.

Our technique helps the denoisers achieve lower errors than their unbiased inputs, and this dominance property significantly improves the convergence rates of the input denoisers.
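The relative l2 error and the convergence rates discussed in this caption can be sketched in Python with NumPy. This is a minimal sketch that assumes the commonly used relative squared error, mean((x - r)^2 / (r^2 + eps)), with an illustrative eps = 1e-2 and averaging over all pixels and channels; neither the constant nor the averaging convention is taken from the paper, and the helper names `relative_l2_error` and `convergence_rate` are hypothetical. The convergence rate is read off as the slope of a least-squares line fitted to log error versus log spp.

```python
import numpy as np


def relative_l2_error(estimate, reference, eps=1e-2):
    """Relative squared (l2) error, averaged over all pixels and channels.

    eps is an assumed small constant that keeps near-black reference pixels
    from dominating the average.
    """
    x = np.asarray(estimate, dtype=np.float64)
    r = np.asarray(reference, dtype=np.float64)
    return float(np.mean((x - r) ** 2 / (r ** 2 + eps)))


def convergence_rate(spp, errors):
    """Slope of log(error) versus log(spp), fitted by least squares.

    Close to -1 when the error is variance-dominated (error ~ 1/spp);
    closer to 0 when a fixed bias term dominates.
    """
    slope, _intercept = np.polyfit(np.log(spp), np.log(errors), 1)
    return float(slope)


# Usage with synthetic error curves (illustrative numbers, not results from the paper).
spp = np.array([16, 32, 64, 128, 256, 512, 1024, 2048])
variance_only = 0.5 / spp          # unbiased input: error falls as 1/spp
with_bias = 0.01 + 0.05 / spp      # biased output: fixed bias plus residual variance
print(convergence_rate(spp, variance_only))  # close to -1
print(convergence_rate(spp, with_bias))      # much flatter
```

The second curve mirrors the caption's observation: once the fixed bias term dominates, adding samples barely lowers the error, so the fitted slope flattens toward zero.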


Abstract


Unbiased rendering algorithms such as path tracing produce accurate images given a huge number of samples, but in practice, these techniques often leave visually distracting artifacts (i.e., noise) in their rendered images due to a limited time budget. A favored approach for mitigating the noise problem is to apply learning-based denoisers to unbiased but noisy rendered images, suppressing the noise while preserving image details. However, such denoising techniques typically introduce a systematic error, i.e., the denoising bias, which, unlike the other type of error, i.e., variance, does not decline as rapidly when the sample size increases. This can technically lead to slow numerical convergence of the denoising techniques. We propose a new combination framework built upon the James-Stein (JS) estimator, which merges a pair of unbiased and biased rendered images, e.g., a path-traced image and its denoised result. Unlike existing post-correction techniques for image denoising, our framework helps an input denoiser achieve lower errors than its unbiased input without relying on accurate estimation of per-pixel denoising errors. We demonstrate that our framework, based on well-established JS theory, improves the error reduction rates of state-of-the-art learning-based denoisers more robustly than recent post-denoisers.
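For reference only, the textbook positive-part James-Stein estimator that shrinks an unbiased vector y toward a target b can be sketched as follows. It is not the paper's neural combiner. The sketch assumes a single known per-element variance for y (homoscedastic noise) and treats a flattened image patch of p >= 3 values as one vector; the function name `james_stein_combine` is hypothetical. The classical result that this estimator's expected squared error does not exceed that of y assumes b is fixed, i.e., independent of the noise in y. A denoised image computed from the same samples does not satisfy that assumption, so the check below uses a fixed, slightly biased target.

```python
import numpy as np


def james_stein_combine(unbiased, biased, variance):
    """Positive-part James-Stein shrinkage of `unbiased` toward `biased`.

    unbiased: p noisy values (e.g., a path-traced patch), any shape
    biased:   shrinkage target of the same shape (e.g., a denoised patch)
    variance: assumed known variance of each element of `unbiased`
    """
    y = np.asarray(unbiased, dtype=np.float64)
    b = np.asarray(biased, dtype=np.float64)
    p = y.size
    if p < 3:
        return y.copy()  # the James-Stein improvement needs at least 3 dimensions
    diff = y - b
    dist2 = float(np.sum(diff ** 2))
    if dist2 == 0.0:
        return b.copy()  # inputs agree; nothing to shrink
    # Weight on the residual between the inputs, clipped at 0 (positive-part variant).
    weight = max(0.0, 1.0 - (p - 2) * variance / dist2)
    return b + weight * diff


# Synthetic check with a fixed, slightly biased target (illustrative values only).
rng = np.random.default_rng(0)
p, sigma = 64, 0.2
truth = np.linspace(0.2, 0.8, p)
target = truth + 0.05
se_unbiased, se_js = 0.0, 0.0
for _ in range(500):
    noisy = truth + rng.normal(0.0, sigma, p)
    combined = james_stein_combine(noisy, target, sigma ** 2)
    se_unbiased += np.sum((noisy - truth) ** 2)
    se_js += np.sum((combined - truth) ** 2)
print(se_js < se_unbiased)
```

The shrinkage weight is shared by every element of the vector, so when the two inputs disagree strongly relative to the noise level, the result stays close to the unbiased values instead of trusting the biased target.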



Copyright Disclaimer

This is the author's version of the work. It is posted here for your personal use. Not for redistribution.

© 2022 ACM. Personal use of this material is permitted. Permission from ACM must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Citation (BibTeX)

@article{Gu:2022:NJS,
  author = {Jeongmin Gu and {Jos\'e Antonio} {Iglesias Guiti\'an} and Bochang Moon},
  title = {Neural James-Stein Combiner for Unbiased and Biased Renderings},
  journal = {ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2022)},
  year = {2022},
  volume = {41},
  number = {6},
  pages = {262:1--262:14},
  doi = {}
}


Acknowledgments

We thank the anonymous reviewers for their constructive comments.

We also thank the following authors and artists for their scenes:

Mareck, SlykDragko, Wig42, NovaZeeke and thecali (training scenes in Fig. 9),

aXel (Glass-of-water), Cem Yuksel (Curly-hair), NewSee20135 (Staircase),

Ondřej Karlík (Pool), Tiziano Portenier (Bookshelf for the Mitsuba porting), and Christian Schüller (Dragon).

Bochang Moon is the corresponding author of the paper.

This work was supported by the National Research Foundation of Korea (NRF) funded by the Korea government (MSIT) (No. 2020R1A2C4002425) and by the Ministry of Culture, Sports and Tourism and the Korea Creative Content Agency (No. R2021080001).

Jose A. Iglesias-Guitian was supported by a 2021 Leonardo Grant for Researchers and Cultural Creators, BBVA Foundation. He also acknowledges the UDC-Inditex InTalent programme, the Spanish Ministry of Science and Innovation (AEI/PID2020-115734RB-C22 and AEI/RYC2018-025385-I), Xunta de Galicia (ED431F 2021/11), and EU-FEDER Galicia (ED431G 2019/01).