In Proceedings of IEEE VR 2024

Javier Taibo¹, Jose A. Iglesias-Guitian¹

¹ Universidade da Coruña, CITIC

Teaser

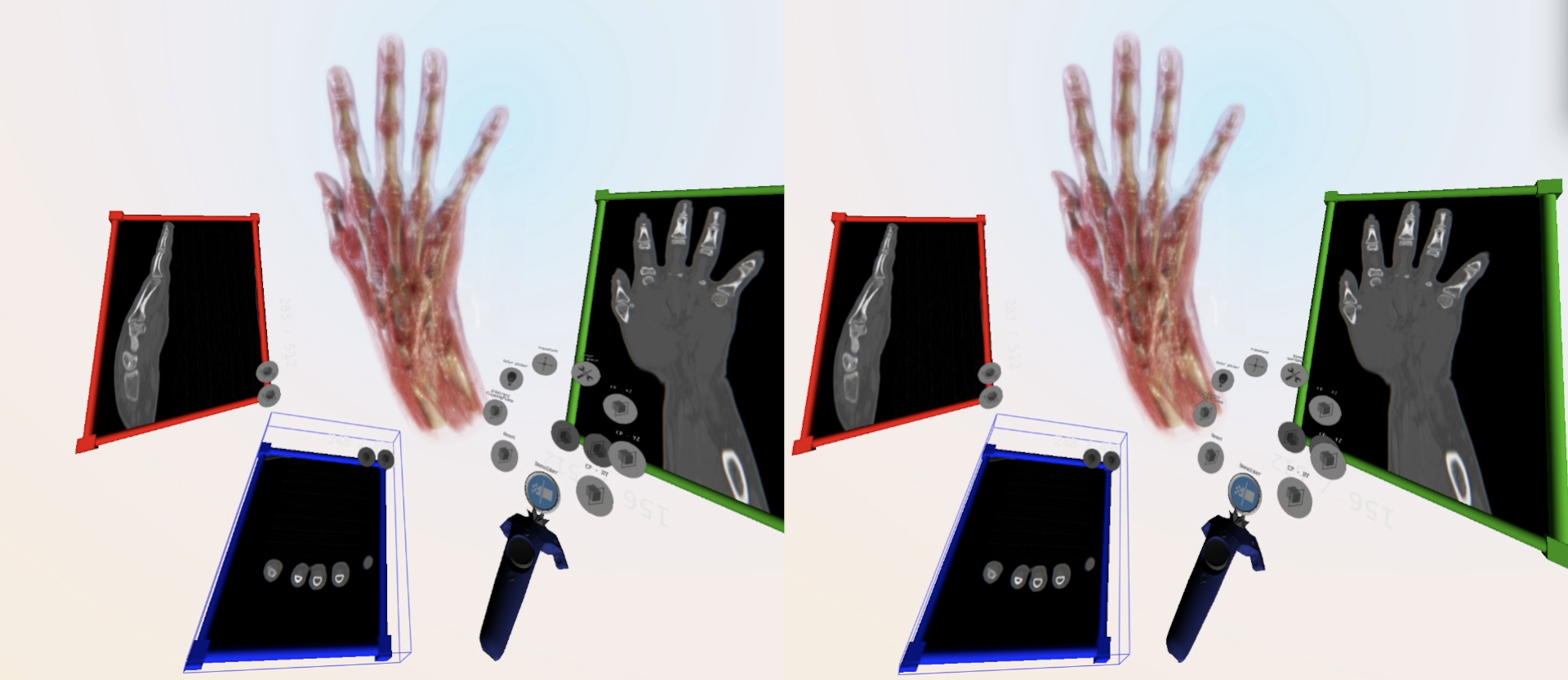

Stereoscopic interactive session rendering a photorealistic 3D reconstruction of the HAND CT scan. This paper presents an immersive 3D medical visualization system that enables volumetric path tracing (VPT) for stereoscopic virtual reality. Our approach combines stereoscopic rendering with state-of-the-art image reprojection and denoising techniques to meet the requirements of VR experiences.

Abstract

Scientific visualizations using physically-based lighting models play a crucial role in enhancing both image quality and realism. In the domain of medical visualization, this trend has gained significant traction under the term cinematic rendering (CR). It enables the creation of 3D photorealistic reconstructions from medical data, offering great potential for aiding healthcare professionals in the analysis and study of volumetric datasets. However, the adoption of such advanced rendering for immersive virtual reality (VR) faces two main limitations related to its high computational demands. First, these techniques are frequently used to produce pre-recorded videos and offline content, which restricts interactivity to predefined volume appearance and lighting settings. Second, when deployed in head-tracked VR environments, they can induce cybersickness symptoms due to the disturbing flicker caused by noisy Monte Carlo renderings. Consequently, the scope for meaningful interactive operations in this modality is constrained, in contrast with the versatile capabilities of classical direct volume rendering (DVR). In this work, we introduce an immersive 3D medical visualization system capable of producing photorealistic and fully interactive stereoscopic visualizations on head-mounted display (HMD) devices. Our approach extends previous linear regression denoising to enable real-time stereoscopic cinematic rendering within AR/VR settings. We demonstrate the capabilities of the resulting VR system, such as interactive rendering and the editing of appearance and transfer functions.

Contents

- Paper [PDF (9.89 MB)]

- Publisher's official version (coming soon)

- Video (Interactive Live-Recorded Sessions) [YouTube]

- Video (Temporal Flicker Comparisons) [YouTube]

Copyright Disclaimer

This is the author's version of the work. It is posted here for your personal use. Not for redistribution. © 2024 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Citation (Bibtex)

Related Work

Real-Time Denoising of Volumetric Path Tracing for Direct Volume Rendering

Jose A. Iglesias-Guitian, Prajita Mane, Bochang Moon. IEEE Transactions on Visualization and Computer Graphics, 28(7), pp. 2734-2747, 2022. [PDF] [Publisher's official version] [Project page] [Bibtex]

Real-time GPU-accelerated Out-of-Core Rendering and Light-field Display Visualization for Improved Massive Volume Understanding

Jose A. Iglesias-Guitian. Ph.D. Thesis, 7 March 2011. [PDF] [Project page] [Bibtex]