In IEEE Transactions on Visualization and Computer Graphics (TVCG)

Jose A. Iglesias-Guitian 1, 2 | Prajita Mane 3 | Bochang Moon 3

1 Centre de Visió per Computador, Universitat Autònoma de Barcelona

2 Universidade da Coruña, CITIC

3 GIST

Teaser

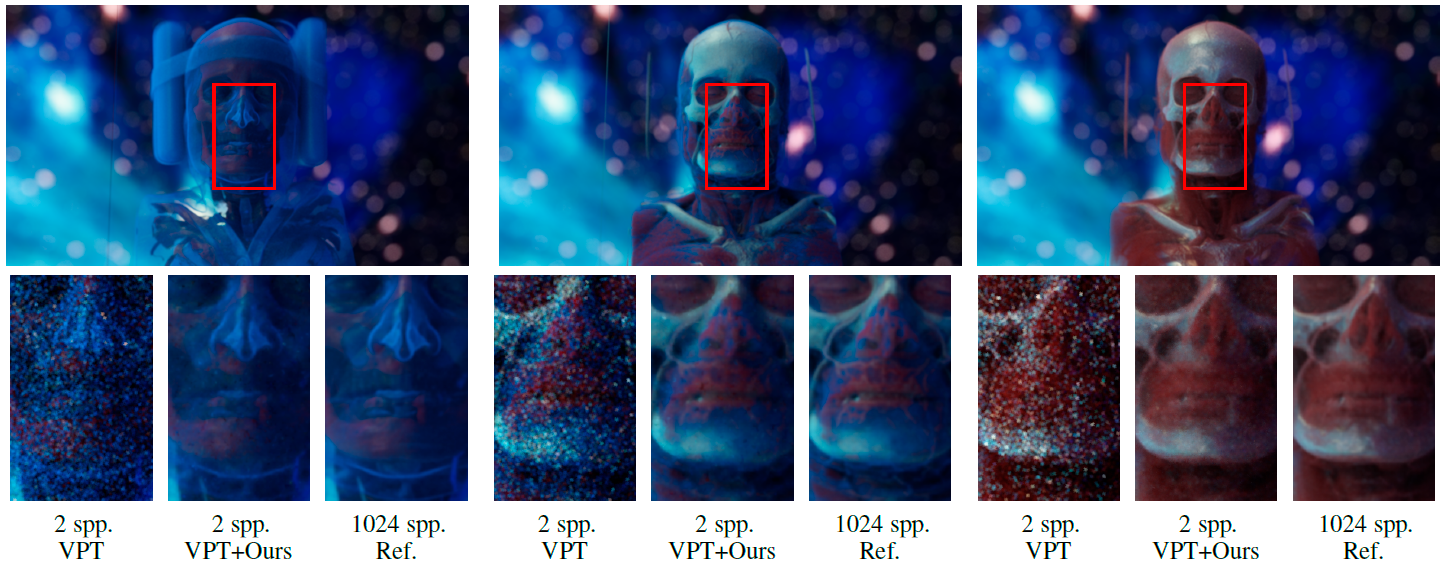

VPT results generated from the same source volume during interactive manipulation of different DVR transfer functions. Multiple scattering bounces are simulated per ray. Our real-time denoiser improves VPT images (MC-DVR with only 2 spp) and effectively reduces their noise. Offline VPT with 1024 spp, which takes minutes to produce a single image, is shown as a reference.

Abstract

Direct Volume Rendering (DVR) using Volumetric Path Tracing (VPT) is a scientific visualization technique that simulates light transport with objects' matter using physically-based lighting models. Monte Carlo (MC) path tracing is often used with surface models, yet its application to volumetric models is difficult due to the complexity of integrating MC light paths in volumetric media with no material boundaries or only smooth ones. Moreover, auxiliary geometry buffers (G-buffers) produced for volumes are typically very noisy, so they fail to guide image denoisers that rely on that information to preserve image details. This makes existing real-time denoisers, which take noise-free G-buffers as their input, less effective when denoising VPT images. We propose the necessary modifications to an image-based denoiser previously used when rendering surface models, and demonstrate effective denoising of VPT images. In particular, our denoising exploits temporal coherence between frames without relying on noise-free G-buffers, which has been a common assumption of existing denoisers for surface models. Our technique preserves high-frequency details through weighted recursive least squares, which handles heterogeneous noise for volumetric models. We show for various real data sets that our method improves the visual fidelity and temporal stability of VPT during classic DVR operations such as camera movements, modifications of the light sources, and edits to the volume transfer function.
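
The abstract mentions weighted recursive least squares as the mechanism for accumulating information across frames. The following is a minimal, generic sketch of that textbook estimator, not the paper's implementation: the class name `WeightedRLS`, the `forgetting` and `init_cov` parameters, and the idea of passing a smaller `weight` for noisier samples are all assumptions made for illustration.

```python
class WeightedRLS:
    """Generic weighted recursive least squares with exponential forgetting.

    Illustrative only: names and defaults are hypothetical and are not
    taken from the paper.
    """

    def __init__(self, dim, forgetting=0.95, init_cov=1e3):
        self.dim = dim
        self.forgetting = forgetting          # 1.0 means no forgetting
        self.theta = [0.0] * dim              # current model coefficients
        # Large initial covariance: the first samples dominate the estimate.
        self.P = [[init_cov if i == j else 0.0 for j in range(dim)]
                  for i in range(dim)]

    def predict(self, x):
        """Evaluate the linear model on feature vector x."""
        return sum(t * xi for t, xi in zip(self.theta, x))

    def update(self, x, y, weight=1.0):
        """Fold one observation (x, y) into the model.

        weight must be positive; a smaller weight (assumed here to stand
        for a noisier sample) pulls the estimate less.
        """
        lam = self.forgetting
        n = self.dim
        # P is symmetric, so P @ x also serves as x^T @ P below.
        Px = [sum(self.P[i][j] * x[j] for j in range(n)) for i in range(n)]
        denom = lam / weight + sum(xi * pxi for xi, pxi in zip(x, Px))
        gain = [pxi / denom for pxi in Px]
        err = y - self.predict(x)
        self.theta = [t + g * err for t, g in zip(self.theta, gain)]
        self.P = [[(self.P[i][j] - gain[i] * Px[j]) / lam for j in range(n)]
                  for i in range(n)]
        return self.predict(x)


if __name__ == "__main__":
    # Track one pixel's value over frames with a constant model (dim=1).
    pixel = WeightedRLS(dim=1)
    for noisy_value, w in [(0.9, 1.0), (1.2, 0.5), (1.0, 1.0), (3.0, 0.05)]:
        print(pixel.update([1.0], noisy_value, weight=w))
```

With a one-dimensional constant model this reduces to a weighted running average per pixel; richer feature vectors give a local linear model of the pixel history. How the weights and features are actually chosen for volumetric data is described in the paper, not here.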

Contents

- Paper [PDF (120 MB)] [PDF (19.3 MB)]

- Supplementary Material [PDF (100 MB)] [PDF (11.8 MB)]

- Supplementary Results Suite [WWW]

- Video (Interactive Live-Sessions) [YouTube] [High-Quality Video (341 MB)]

- Video (Benchmark Comparisons) [YouTube] [High-Quality Video (350 MB)]

- Publisher's official version [DOI]

Copyright Disclaimer

This is the author's version of the work. It is posted here for your personal use. Not for redistribution. © 2020 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. Digital Object Identifier no. 10.1109/TVCG.2020.3037680

Citation (Bibtex)

Related Work

Immersive 3D Medical Visualization in Virtual Reality using Stereoscopic Volumetric Path Tracing

Javier Taibo and Jose A. Iglesias Guitian. Proceedings of IEEE VR 2024, pp. 1044-1053, 2024. [PDF] [Publisher's official version] [Project page] [Bibtex]

Pixel History Linear Models for Real-Time Temporal Filtering

Jose A. Iglesias Guitian, Bochang Moon, Charalampos Koniaris, Eric Smolikowski and Kenny Mitchell. Computer Graphics Forum, 35 (7), pp. 363-372. Proc. Pacific Graphics 2016. [PDF] [Video] [High-Quality Video (499 MB)] [Project page] [Bibtex]

Adaptive Rendering with Linear Predictions

Bochang Moon, Jose A. Iglesias Guitian, Sung-Eui Yoon and Kenny Mitchell. ACM Transactions on Graphics (TOG), vol. 34, no. 4, 121, 2015. Proc. SIGGRAPH 2015. [PDF] [Supplemental Material] [Project page] [Bibtex]

Real-time GPU-accelerated Out-of-Core Rendering and Light-field Display Visualization for Improved Massive Volume Understanding

Jose A. Iglesias Guitian. Ph.D. Thesis, 7th March 2011. [PDF] [Project page] [Bibtex]